Over seven months, I worked with a client to define a use case and prototype a co-adaptive interaction to make creative software easier to use. See the product website here.

SKILLS

synthesis // affinity diagramming, customer journey map, visioning

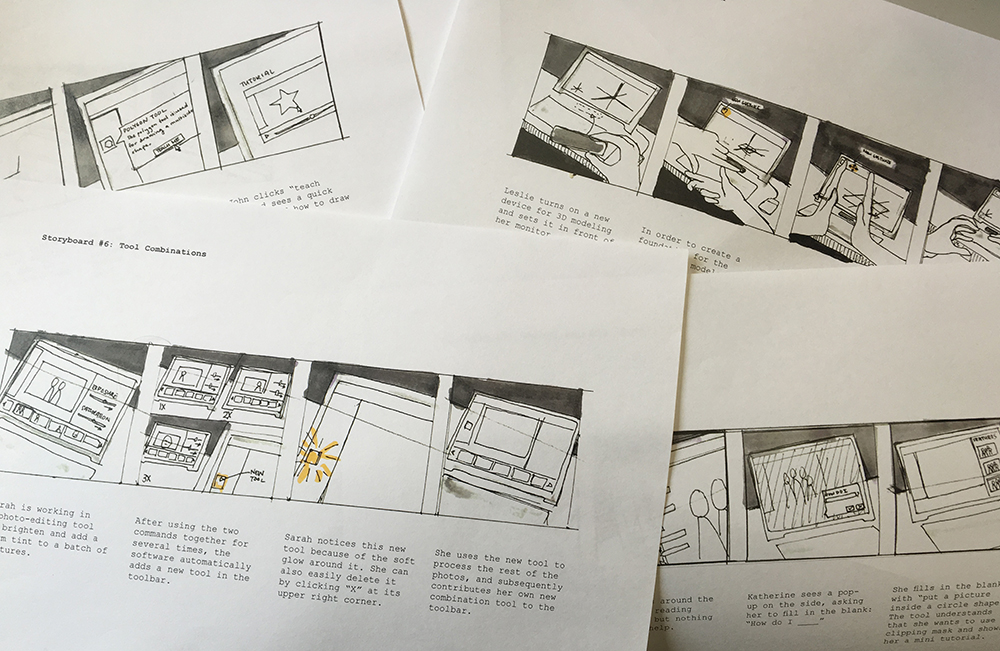

prototyping // storyboarding, low to high-fidelity prototyping, usability testing, heuristic evaluation

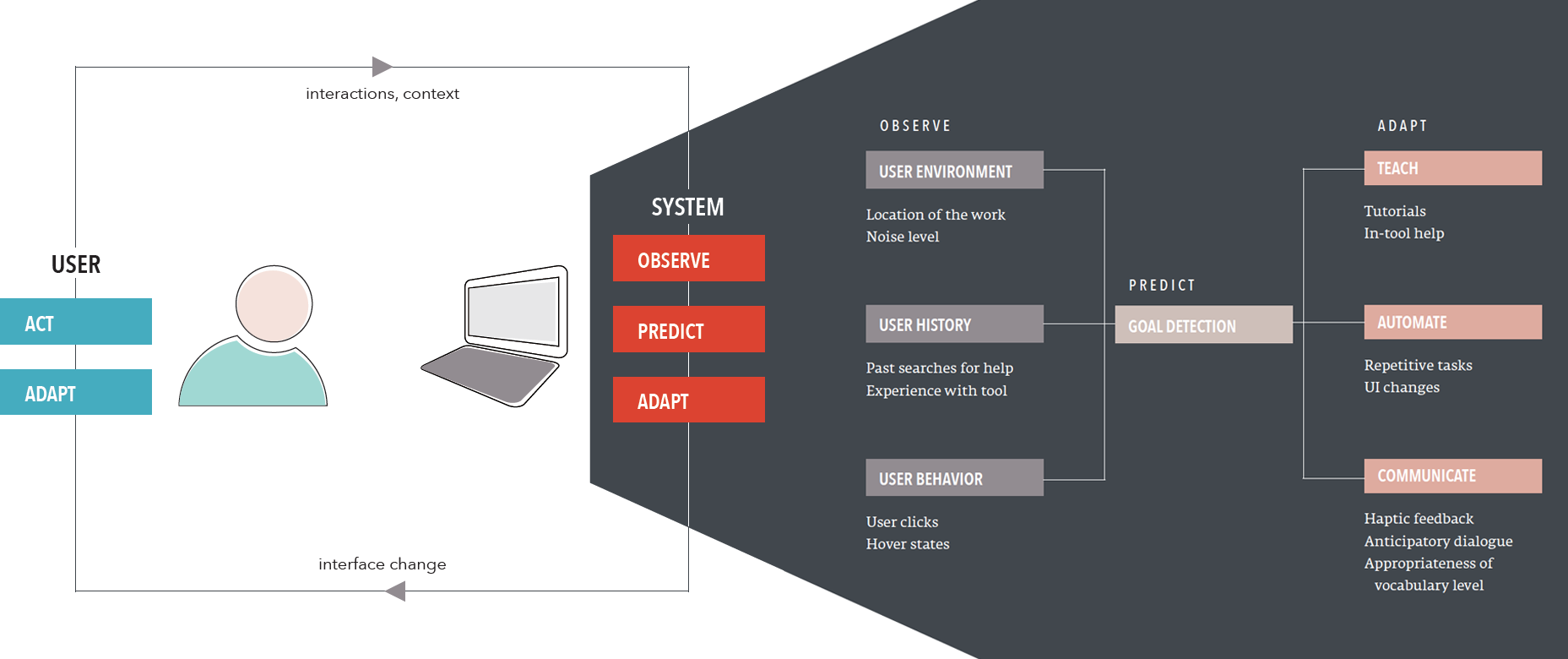

WHAT IS A CO-ADAPTIVE INTERACTION?

LAYING THE GROUNDWORK

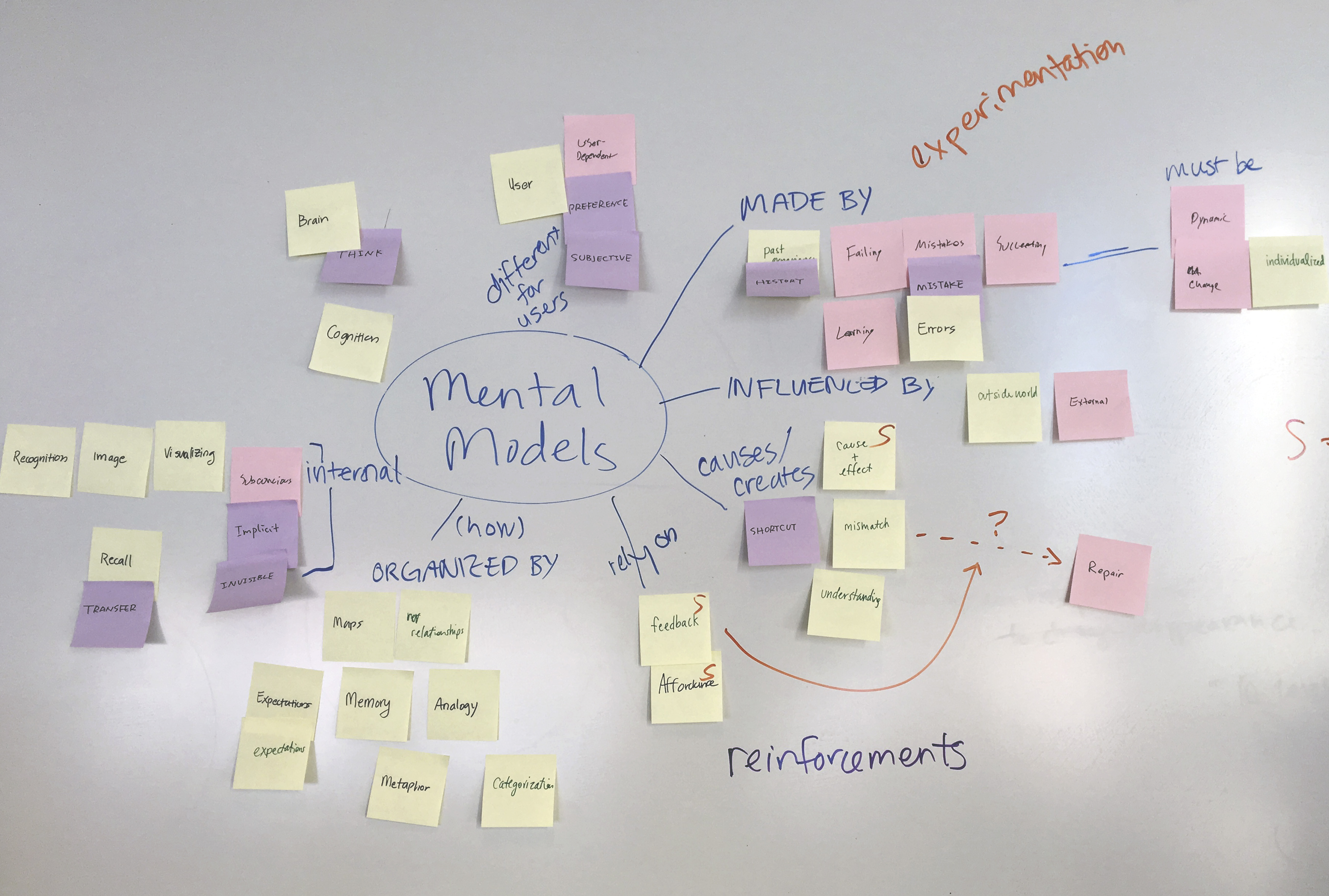

From week 1, I pushed for a rigorous, reusable co-adaptive framework within which to structure our prototypes. This early decision helped us think systematically and holistically throughout the research phase.

MY ROLE

strategy & vision // I articulated the insights that emerged from several months of research. As a generalist, I pushed for a high-level, conceptual understanding of the problem space.

product // I defined the workflow and helped scope the product features of our high-fidelity prototype.

misc // I worked on all aspects of the project and also served as researcher, project manager, and content strategist. On any given day, I might be writing, analyzing data, and coordinating meetings, while conducting user tests or ideating in between.

UNCOVERING THE UNDERLYING PROBLEMS OF CREATIVE SOFTWARE

Alongside traditional user research methods, we stepped outside those boundaries and explored analogous domains for design inspiration. For instance, we played games like Minecraft to experiment with wildly different interfaces. We talked to dancers, yoga instructors, and climbers. We even taught a teammate how to drive while acting as a co-adaptive interface ourselves.

WHAT WE DISCOVERED

Goals, prior knowledge, and context are not isolated factors. Their interplay creates a unique user profile. Any system that attempts to classify users by a single factor (e.g., novice vs. expert) will miss the inherent nuances at play in every interaction.

Users turn to online and in-person interactions for help because they are dynamic, personal, and engaging. They watch tutorials and read forums for personalized help. How can we bring the elements of conversation into the tool when users need help?

Users tend to search for help based on the task rather than the feature. But if users don’t know what feature to use to complete their task, how can they use the correct terminology to search for the feature?

Users only relinquish control and accept changes after they build trust with the software. By adopting more incremental and salient changes, software can set up a pattern of successful interactions.

The states in which a user learns, gains skills, and fine-tunes are necessary to the creative process. However, creative struggle ≠ struggle with the tool. When users grapple with complex features, there is an opportunity to refocus them on their creative work.

What users fundamentally seek—and what is missing in their creative software—is a human element of teaching, sharing, and creating.

OUR VISION

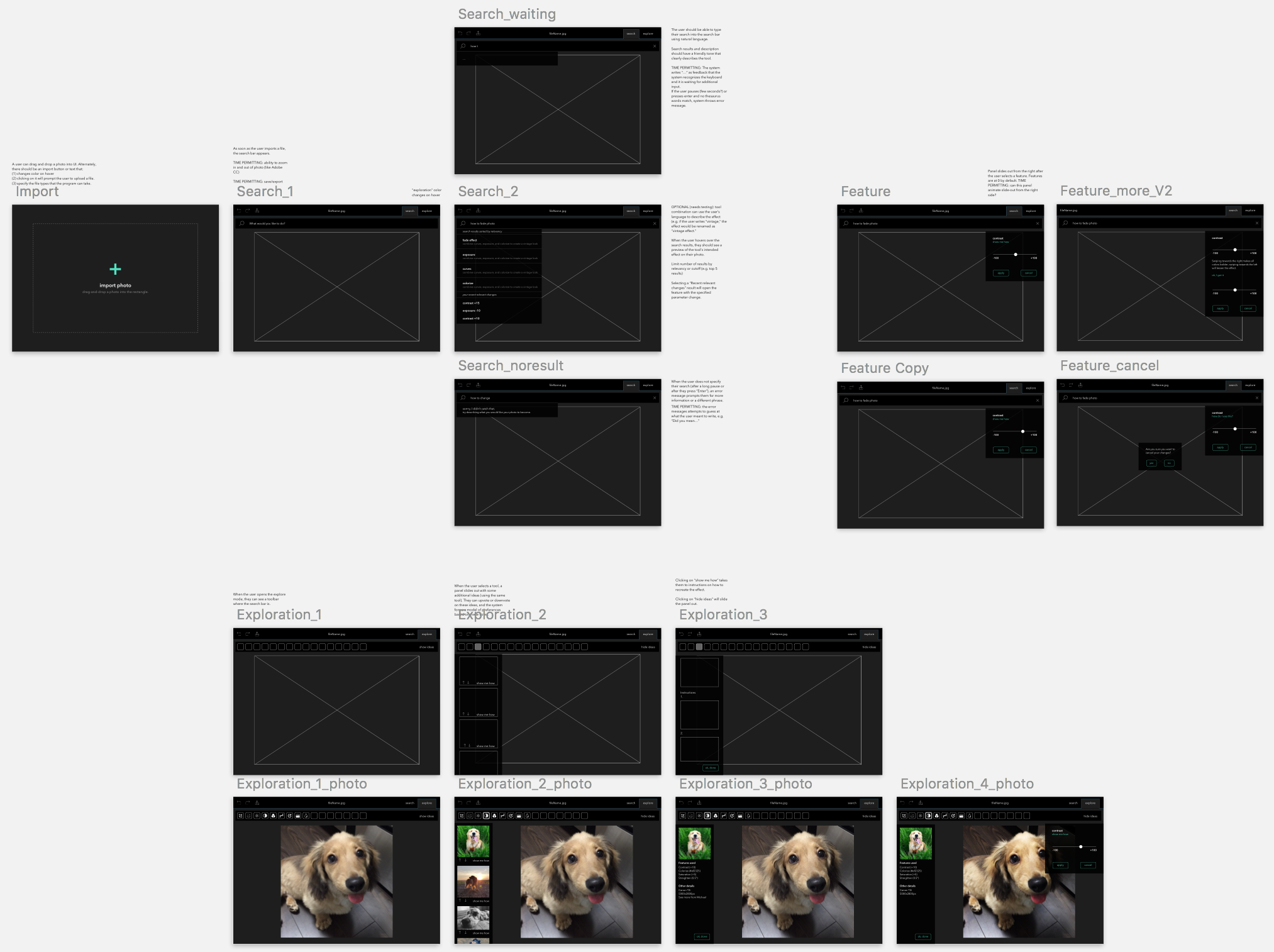

Given only two months to build and test a prototype, we scoped our product to a simple photo editor. However, we believe that more robust creative software, with a larger feature set, would benefit even more from our interface.

Our conceptual photo-editing software includes two modes:

(1) Search mode allows users to search for a task or workflow in their own natural language. The system learns their vocabulary and adapts to it, ranking the search results (features and workflows) according to the user's mental model. On the backend, we use Mechanical Turk to crowdsource the phrases users are likely to type and populate a thesaurus of search terms. Instead of requiring an exact feature name, like "Curves tool," the system lets the user type "I want a hazy effect" to see relevant results.
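To make the search-mode idea more concrete, here is a minimal Python sketch of one way it could work. It assumes the crowdsourced thesaurus is a plain mapping from everyday words to candidate features, and that "learning the user's language" means counting which feature a user picks after each query and boosting it next time. The `PhraseSearch` class, the `THESAURUS` entries, and the scoring weights are all hypothetical illustrations, not the prototype's actual implementation.

```python
import re
from collections import Counter, defaultdict

# Hypothetical crowdsourced thesaurus: everyday words -> candidate features,
# ordered by how often crowd workers associated them (an assumption).
THESAURUS = {
    "hazy": ["Curves", "Clarity", "Dehaze"],
    "brighter": ["Exposure", "Curves", "Levels"],
    "vintage": ["Curves", "Grain", "Vignette"],
}


class PhraseSearch:
    """Hypothetical search: maps natural-language queries to features, re-ranked per user."""

    def __init__(self, thesaurus):
        self.thesaurus = thesaurus
        # For each word, count which features this particular user chose.
        self.choices = defaultdict(Counter)

    def _words(self, query):
        # Lowercase and keep only alphabetic tokens, so "hazy," matches "hazy".
        return re.findall(r"[a-z]+", query.lower())

    def search(self, query):
        scores = Counter()
        for word in self._words(query):
            candidates = self.thesaurus.get(word, [])
            for rank, feature in enumerate(candidates):
                # Crowd prior: features listed earlier for a word score higher.
                scores[feature] += len(candidates) - rank
                # Personal signal: boost features this user picked for this word.
                scores[feature] += 2 * self.choices[word][feature]
        return [feature for feature, _ in scores.most_common()]

    def record_choice(self, query, feature):
        # Learn the user's vocabulary from the result they actually selected.
        for word in self._words(query):
            if word in self.thesaurus:
                self.choices[word][feature] += 1


if __name__ == "__main__":
    search = PhraseSearch(THESAURUS)
    print(search.search("I want a hazy effect"))  # crowd ranking only
    search.record_choice("I want a hazy effect", "Dehaze")
    search.record_choice("make it hazy", "Dehaze")
    print(search.search("I want a hazy effect"))  # "Dehaze" now ranks first
```

Keeping the crowd prior and the personal counts as separate, additive signals mirrors the two sources the search mode relies on: the crowdsourced thesaurus gives sensible defaults for a new user, and each selection gradually shifts the ranking toward that user's own vocabulary.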

(2) Explore mode provides users with the toolbar they're used to. By selecting a feature, they can view a panel of photos that other people have edited with that feature. Our users repeatedly told us that they look to other creatives for inspiration. By bringing that inspiration inline with the tool, we let users browse examples and learn which feature combinations others have used to capture the effect they want.

Part of our workflow for a smart, simplified, minimal photo-editing interface.

MY EXPERIENCE